Gradient Descent Algorithm Optimization and its Application in Linear Regression Model

DOI:

https://doi.org/10.5281/zenodo.13753916

ARK:

https://n2t.net/ark:/40704/AJNS.v1n1a01

References:

12

Keywords:

Gradient Descent Algorithm, Linear Regression Model, Optimization Methods, Parameter Estimation, Big Data Environment

Abstract

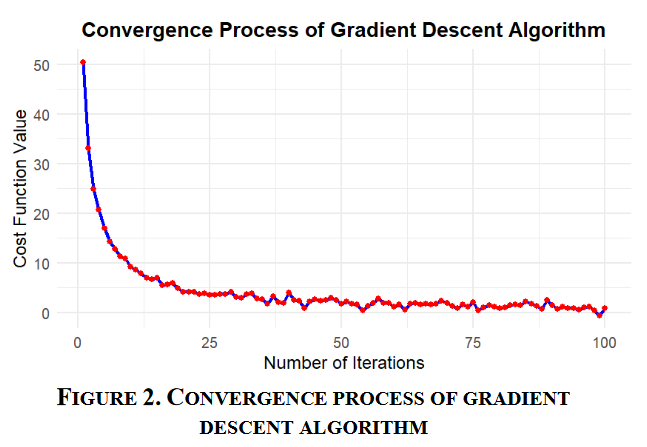

This paper systematically analyzes the application of the gradient descent algorithm to the linear regression model and proposes several methods for optimizing it. It first introduces the basic concepts and mathematical formulation of the linear regression model, then explains the principles and mathematical derivation of gradient descent. It next discusses how gradient descent is applied to parameter estimation and model optimization, particularly in big data environments. Several optimization methods for gradient descent are presented, including learning rate adjustment, the momentum method, RMSProp, and the Adam optimization algorithm. The paper also discusses the advantages and disadvantages of gradient descent and the challenges it faces in practical applications, and proposes directions for future research. The results indicate that the improved gradient descent algorithms achieve higher computational efficiency and better convergence when processing large-scale data and complex models.
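As an illustration of the core idea in the abstract, the following is a minimal sketch of batch gradient descent fitting a one-feature linear regression by minimizing mean squared error, with an optional classical momentum term. It is not the paper's implementation: the function name `fit_linear_gd`, the toy data, and the hyperparameter values are assumptions chosen for illustration.

```python
def fit_linear_gd(xs, ys, lr=0.05, epochs=2000, momentum=0.0):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error.

    Hypothetical helper for illustration; lr, epochs, and momentum
    are assumed values, not settings taken from the paper.
    """
    n = len(xs)
    w, b = 0.0, 0.0
    vw, vb = 0.0, 0.0  # velocity terms; they reduce to plain steps when momentum == 0
    for _ in range(epochs):
        # Residuals of the current model on every sample (batch update).
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Classical momentum: keep a decaying sum of past update steps.
        vw = momentum * vw - lr * grad_w
        vb = momentum * vb - lr * grad_b
        w += vw
        b += vb
    return w, b


# Toy data generated from y = 2x + 1 (assumed example, no noise).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_linear_gd(xs, ys)                    # plain gradient descent
w_m, b_m = fit_linear_gd(xs, ys, momentum=0.9)  # with momentum
print(round(w, 4), round(b, 4), round(w_m, 4), round(b_m, 4))
```

On this noise-free toy data both variants should recover a slope near 2 and an intercept near 1. RMSProp and Adam would replace the fixed step `lr` with per-parameter steps scaled by running averages of squared gradients; they are omitted here to keep the sketch short.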

License

Copyright (c) 2024 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.