Weight Initialization Methods in Convolutional Neural Networks and Their Impact on Training Efficiency

DOI:

https://doi.org/10.5281/zenodo.13755632

ARK:

https://n2t.net/ark:/40704/AJNS.v1n1a02

References:

8

Keywords:

Convolutional Neural Networks, Weight Initialization, Training Efficiency, Deep Learning, Performance Analysis

Abstract

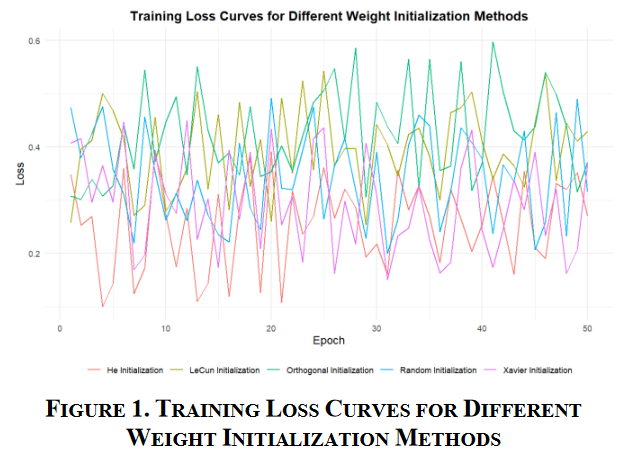

This study analyzes how different weight initialization methods affect convolutional neural networks, with a focus on training efficiency. Drawing on analysis and derivation from existing studies, we expect initialization methods to differ significantly in training speed, stability, and final performance. Specifically, the study compares random, Xavier, He, LeCun, and orthogonal initialization. The analysis indicates that choosing an appropriate weight initialization method can significantly improve the training efficiency and performance of the model. The main contribution of this article is a systematic comparison of several common weight initialization methods, together with performance expectations based on existing research, to serve as a reference for subsequent work. Future research can verify these expectations through actual experiments and explore novel initialization strategies to further improve training efficiency and model performance.
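To make the five compared methods concrete, the following is a minimal NumPy sketch of how each one could draw the weights of a single convolutional kernel. It is an illustration under stated assumptions, not the procedure used in the study: the function names `conv_fans` and `init_weights` are hypothetical, the kernel layout `(out_channels, in_channels, height, width)` is assumed, the Gaussian variants of Xavier, He, and LeCun initialization are used (uniform variants also exist), and the fixed standard deviation of 0.01 for plain random initialization is an arbitrary choice.

```python
import numpy as np


def conv_fans(shape):
    """Return (fan_in, fan_out) for a kernel shaped (out_ch, in_ch, kh, kw)."""
    out_ch, in_ch, kh, kw = shape
    receptive_field = kh * kw
    return in_ch * receptive_field, out_ch * receptive_field


def init_weights(shape, method, rng):
    """Draw a conv kernel with one of the five initialization methods."""
    fan_in, fan_out = conv_fans(shape)

    if method == "random":
        # Assumption: a fixed small std that ignores the layer size.
        return rng.normal(0.0, 0.01, size=shape)

    if method == "orthogonal":
        # Orthogonalize a Gaussian matrix with a QR decomposition, treating
        # the kernel as a (out_ch, fan_in) matrix.
        rows, cols = shape[0], fan_in
        gaussian = rng.normal(size=(max(rows, cols), min(rows, cols)))
        q, r = np.linalg.qr(gaussian)
        q = q * np.sign(np.diag(r))  # fix column signs so the draw is unbiased
        if rows < cols:
            q = q.T
        return q.reshape(shape)

    if method == "xavier":
        std = np.sqrt(2.0 / (fan_in + fan_out))  # balances fan-in and fan-out
    elif method == "he":
        std = np.sqrt(2.0 / fan_in)  # scaled for ReLU-style activations
    elif method == "lecun":
        std = np.sqrt(1.0 / fan_in)  # keeps pre-activation variance near 1
    else:
        raise ValueError(f"unknown method: {method}")
    return rng.normal(0.0, std, size=shape)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    kernel_shape = (64, 32, 3, 3)  # hypothetical layer: 32 -> 64 channels, 3x3
    for name in ("random", "xavier", "he", "lecun", "orthogonal"):
        w = init_weights(kernel_shape, name, rng)
        print(f"{name:>10}: empirical std = {w.std():.4f}")
```

Running the script prints the empirical weight scale each method produces for the same layer, which is the quantity the variance-based methods tune to the layer's fan-in and fan-out.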




Copyright (c) 2024 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.