A Review of Feature Engineering Methods in Regression Problems

DOI: https://doi.org/10.5281/zenodo.13905622

ARK: https://n2t.net/ark:/40704/AJNS.v1n1a06

References: 16

Keywords: Data Preprocessing, Feature Selection, Feature Scaling, Deep Learning

Abstract

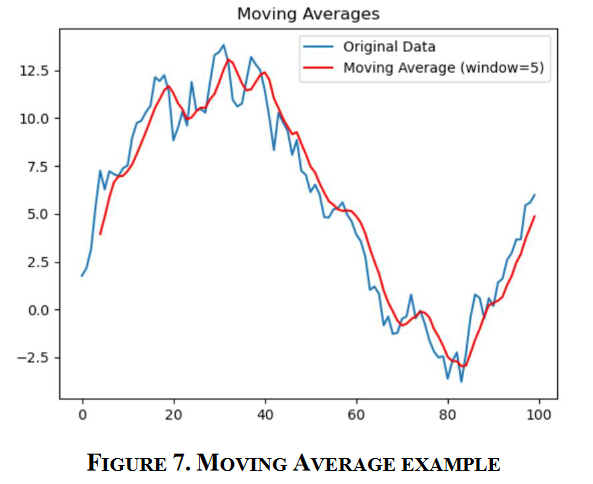

This paper reviews the role of feature engineering in regression problems and surveys the existing methods. It analyzes feature processing techniques, including data transformation, interaction feature generation, dimensionality reduction, and technical indicators, and draws on published research results to discuss how these techniques affect a model's predictive ability. By systematically summarizing the advantages and disadvantages of each method, the review gives researchers and practitioners an overview of current feature engineering techniques. The paper also identifies existing research gaps and possible directions for future work, and proposes further application scenarios and research topics for these techniques.

License

Copyright (c) 2024 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.