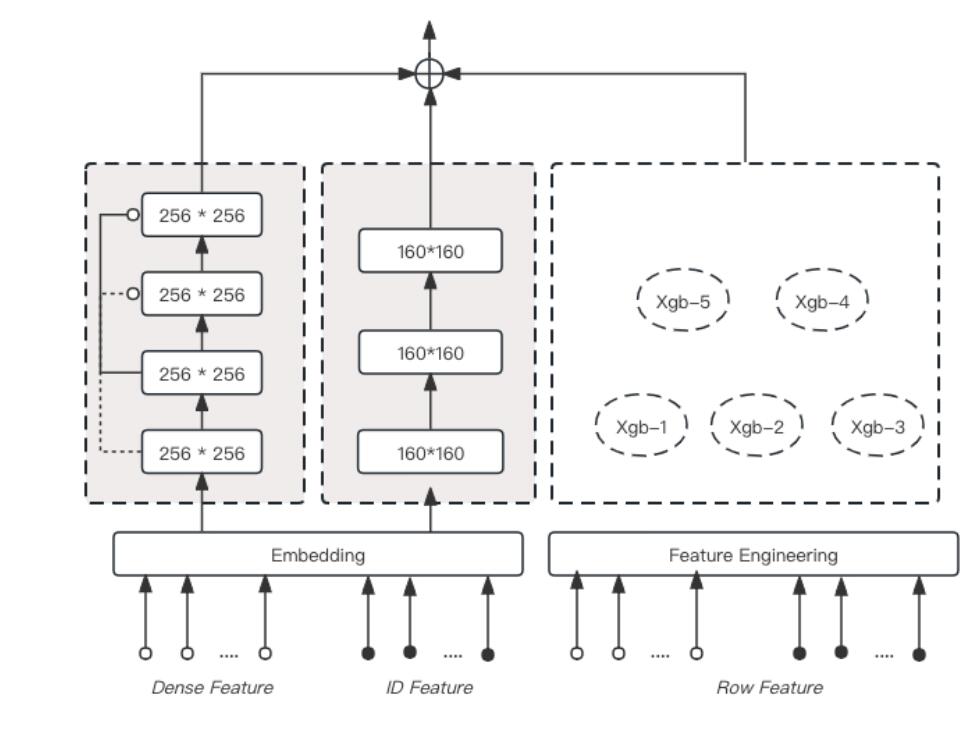

Ensemble Fusion: Optimizing Market Prediction with Neural Networks, Residual Networks and Xgboost

DOI: https://doi.org/10.5281/zenodo.11060009

References: 24

Keywords: Quantitative Trading, Market Prediction, Ensemble Learning, Neural Networks, ResNet, XGBoost

Abstract

In financial markets, accurate prediction of market trends plays a pivotal role in guiding investment decisions and maximizing returns. This paper presents an ensemble model that combines neural networks (NN), residual networks (ResNet), and XGBoost for market prediction. In extensive experiments, the ensemble outperforms each individual model as well as other ensemble configurations. By integrating the predictive strengths of NN, ResNet, and XGBoost, the ensemble achieves significant improvements in predictive accuracy. This underscores the potential of ensemble learning to refine market prediction strategies and to support better decision-making by traders and investors. The research contributes to quantitative trading by providing a robust and effective framework for market prediction, giving practitioners insights for navigating the complexities of financial markets with greater confidence.
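The abstract does not state how the outputs of the three models are combined. Below is a minimal sketch of one common fusion rule: a weighted average of per-sample predictions. It is not necessarily the rule used in this paper. The model names, the equal weights, and the toy return values are all hypothetical and are used only for illustration.

```python
from typing import Dict, List, Sequence


def fuse_predictions(
    preds: Dict[str, Sequence[float]], weights: Dict[str, float]
) -> List[float]:
    """Return the weighted average of per-sample predictions from several models."""
    if set(preds) != set(weights):
        raise ValueError("preds and weights must name the same models")
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    lengths = {len(p) for p in preds.values()}
    if len(lengths) != 1:
        raise ValueError("all models must predict the same number of samples")
    n = lengths.pop()
    # Dividing by the total weight makes each fused value a convex combination.
    return [
        sum(weights[name] * preds[name][i] for name in preds) / total
        for i in range(n)
    ]


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical next-period returns and base-model predictions (toy numbers).
y_true = [0.010, -0.020, 0.005, 0.015]
preds = {
    "nn": [0.012, -0.010, 0.000, 0.020],
    "resnet": [0.006, -0.025, 0.010, 0.010],
    "xgboost": [0.011, -0.022, 0.002, 0.018],
}
# Equal weights are an assumption; in practice they would be tuned on held-out data.
weights = {"nn": 1.0, "resnet": 1.0, "xgboost": 1.0}

fused = fuse_predictions(preds, weights)
for name, p in preds.items():
    print(f"{name:8s} MSE: {mse(y_true, p):.3e}")
print(f"ensemble MSE: {mse(y_true, fused):.3e}")
```

With non-negative weights, each fused prediction lies between the smallest and largest base prediction for that sample. Because squared error is convex, the fused MSE can never exceed the same weighted average of the individual models' MSEs. It can still be worse than the single best model, which is why weights (or a learned stacking model) are usually fitted on validation data rather than fixed in advance.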



License

Copyright (c) 2024 Copyright reserved by the author.

This work is licensed under a Creative Commons Attribution 4.0 International License.