Integrating Machine Learning for Optimal Path Planning

DOI: https://doi.org/10.70393/6a6374616d.323534

ARK: https://n2t.net/ark:/40704/JCTAM.v2n1a04

Disciplines: Artificial Intelligence

Subjects: Machine Learning

References: 22

Keywords: Machine Learning, Robotic Vision, Path Planning

Abstract

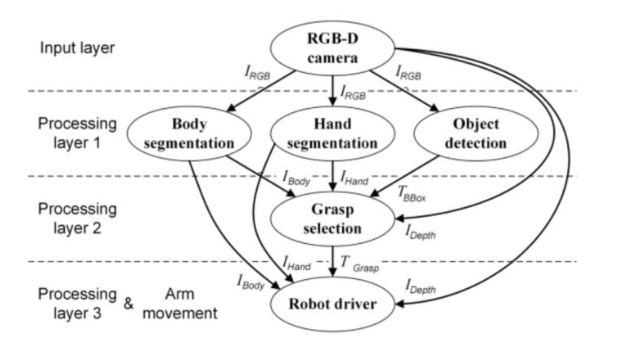

In AI-based path planning, the learner is not told which actions to take, as it is in most forms of machine learning. Instead, the learner must discover, through trial and error, which actions yield the most reward. In the most interesting and challenging cases, actions affect not only the immediate reward but also the next situation and, through it, all subsequent rewards. Trial-and-error search and delayed reward are the two most important distinguishing features of reinforcement learning (RL), which is defined not by characterizing learning methods but by characterizing a learning problem.
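To make the two features concrete, the following is a minimal sketch of tabular Q-learning on a small grid. It is not the method of this article. The 4x4 grid, the reward values (-1 per move, +10 on reaching the goal), the hyperparameters, and the names `step`, `train`, and `greedy_path` are all assumptions chosen for illustration. The agent is never told which move is correct (trial and error), and the only positive reward arrives at the goal, so earlier moves are credited through the discounted value of the next state (delayed reward).

```python
import random

# Hypothetical 4x4 grid world; sizes, rewards, and names are illustrative only.
ROWS, COLS = 4, 4
START, GOAL = (0, 0), (3, 3)
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, action):
    """Apply an action; a move off the grid leaves the agent in place."""
    r = min(max(state[0] + action[0], 0), ROWS - 1)
    c = min(max(state[1] + action[1], 0), COLS - 1)
    nxt = (r, c)
    # Assumed reward scheme: -1 per move, +10 only when the goal is reached.
    reward = 10.0 if nxt == GOAL else -1.0
    return nxt, reward, nxt == GOAL


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = {(r, c): [0.0] * len(ACTIONS) for r in range(ROWS) for c in range(COLS)}
    for _ in range(episodes):
        state, done, steps = START, False, 0
        while not done and steps < 200:  # cap episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore: random action
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])  # exploit
            nxt, reward, done = step(state, ACTIONS[a])
            # Delayed reward: the target includes the discounted value of the
            # next state, so the goal reward propagates back to earlier moves.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            steps += 1
    return q


def greedy_path(q, max_steps=50):
    """Follow the highest-valued action from START until GOAL or the step cap."""
    state, path = START, [START]
    for _ in range(max_steps):
        if state == GOAL:
            break
        a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
        state, _, _ = step(state, ACTIONS[a])
        path.append(state)
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

Running the script prints the list of grid cells visited by the learned greedy policy. The per-move penalty is a design choice in this sketch: it makes shorter routes score higher, which is one simple way to tie the reward signal to path length.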

License

Copyright (c) 2025 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.