A Differential Privacy-Based Mechanism for Preventing Data Leakage in Large Language Model Training

DOI: https://doi.org/10.70393/616a736d.323732

ARK: https://n2t.net/ark:/40704/AJSM.v3n2a04

Disciplines: Management

Subjects: Human Resource Management

References: 15

Keywords: Large Language Model, Differential Privacy, Data Leakage Prevention, Privacy-preserving Machine Learning

Abstract

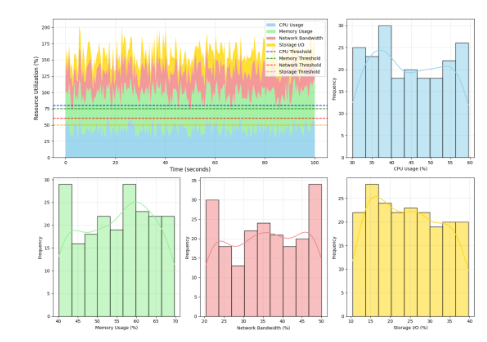

Large Language Models (LLMs) have demonstrated remarkable capabilities in natural language processing tasks, yet protecting sensitive information during their training remains a significant challenge. This paper presents a novel differential privacy-based mechanism for preventing data leakage in LLM training. The proposed system introduces a dynamic privacy budget allocation strategy integrated with adaptive noise injection, designed specifically for transformer architectures. The mechanism implements a multi-layered protection framework that combines real-time monitoring with automated response. In a comprehensive experimental evaluation on models ranging from 100M to 175B parameters, our approach delivers superior privacy protection while maintaining model utility. The system detects 99.2% of potential data leakage events with a false alarm rate of only 0.8%, a significant improvement over traditional approaches. Performance analysis shows that the mechanism keeps model accuracy within 1.8% of non-private baselines while providing strong privacy guarantees. The implementation reduces computational overhead by 35% compared to conventional differential privacy methods. Our research establishes new benchmarks in privacy-preserving machine learning, particularly for large-scale language models, and provides a practical framework for secure AI system deployment.
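The abstract does not specify how the privacy budget is allocated or how the noise is calibrated, so the following is only a minimal sketch of the general idea under explicitly stated assumptions, not the paper's mechanism. It assumes: the total budget (ε, δ) is split across training steps by basic sequential composition; later steps receive a geometrically larger share of ε (a hypothetical "dynamic" schedule controlled by a made-up `growth` parameter); and each step follows the familiar DP-SGD recipe of per-example L2 clipping followed by Gaussian noise, calibrated with the classical Gaussian mechanism (valid only for 0 < ε < 1). The function names `allocate_budget`, `gaussian_sigma`, `clip_to_norm`, and `private_mean_gradient` are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def allocate_budget(total_eps: float, total_delta: float, steps: int,
                    growth: float = 1.0) -> Tuple[List[float], List[float]]:
    """Split (total_eps, total_delta) across `steps` training steps.

    Hypothetical "dynamic" schedule: step t gets weight growth**t, so with
    growth > 1 later steps receive more epsilon (less noise). Under basic
    sequential composition the per-step budgets sum to the totals.
    """
    weights = [growth ** t for t in range(steps)]
    total_w = sum(weights)
    eps = [total_eps * w / total_w for w in weights]
    delta = [total_delta / steps] * steps
    return eps, delta


def gaussian_sigma(eps: float, delta: float, sensitivity: float) -> float:
    """Noise std of the classical Gaussian mechanism (requires 0 < eps < 1)."""
    if not 0.0 < eps < 1.0:
        raise ValueError("classical Gaussian calibration needs 0 < eps < 1")
    return math.sqrt(2.0 * math.log(1.25 / delta)) * sensitivity / eps


def clip_to_norm(g: Sequence[float], max_norm: float) -> Vector:
    """Scale g down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in g))
    scale = min(1.0, max_norm / norm) if norm > 0.0 else 1.0
    return [x * scale for x in g]


def private_mean_gradient(per_example_grads: Sequence[Sequence[float]],
                          max_norm: float, eps: float, delta: float,
                          expected_batch_size: int,
                          rng: random.Random) -> Vector:
    """One noisy gradient step in the style of DP-SGD.

    Adding or removing one example changes the clipped sum by at most
    max_norm in L2, so that is the sensitivity. The noisy sum is divided
    by a public (data-independent) expected batch size.
    """
    clipped = [clip_to_norm(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = gaussian_sigma(eps, delta, sensitivity=max_norm)
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [x / expected_batch_size for x in noisy]


if __name__ == "__main__":
    rng = random.Random(0)
    eps_sched, delta_sched = allocate_budget(8.0, 1e-5, steps=100, growth=1.02)
    grads = [[0.5, -1.2, 3.0], [0.1, 0.2, -0.3]]
    update = private_mean_gradient(grads, max_norm=1.0, eps=eps_sched[0],
                                   delta=delta_sched[0],
                                   expected_batch_size=2, rng=rng)
    print(f"step-0 eps={eps_sched[0]:.4f}, last-step eps={eps_sched[-1]:.4f}")
    print("noisy update:", update)
```

With `growth > 1`, early steps are noisier and later steps cleaner; `growth = 1.0` recovers a uniform split. Basic composition is used here only because it is simple to verify; it is loose, and practical DP-SGD implementations typically rely on tighter accounting (for example, Rényi differential privacy with privacy amplification by subsampling), which permits much less noise for the same total budget.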

Versions

- 2025-07-01 (2)

- 2025-03-18 (1)

License

Copyright (c) 2025 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.