VGCN: An Enhanced Graph Convolutional Network Model for Text Classification

DOI: https://doi.org/10.5281/zenodo.13118430

ARK: https://n2t.net/ark:/40704/JIEAS.v2n4a16

References: 22

Keywords: Graph Convolutional Neural Networks, Text Classification, Variational Structure

Abstract

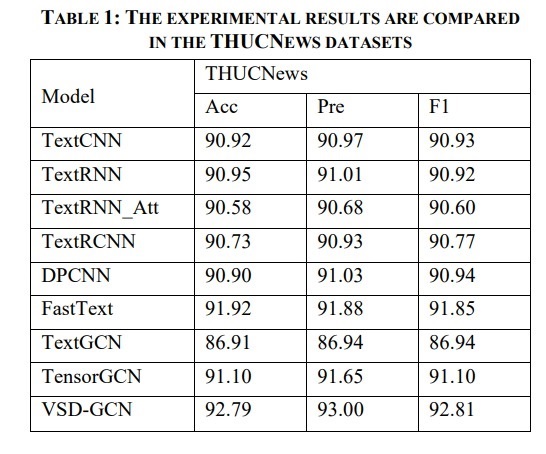

The rapid growth of textual data has made text classification a critical research area in Natural Language Processing. Traditional Graph Convolutional Networks (GCNs) struggle with text classification because their edge weights are fixed and they cannot explore high-dimensional graph structural features, which limits their ability to capture semantic information. To address these issues, we propose an improved graph convolutional network model, the Variational Graph Convolutional Network (VGCN). In VGCN, we design a variational structure within the GCN that learns latent representations of textual data, forming a continuous, high-dimensional distribution over graph structure. This representation captures complex graph structural features, thereby enhancing semantic information extraction and improving classification performance. Experimental results on datasets including THUCNews and Toutiao show that VGCN significantly outperforms baseline models such as CNN, RNN, and GCN on text classification tasks. These findings demonstrate the effectiveness of VGCN in capturing semantic information in text.
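The abstract does not give VGCN's equations, so the following is only a rough, hypothetical illustration of what "a variational structure within the GCN" can look like. It combines the standard GCN propagation rule (symmetric normalization of A + I) with a VGAE-style Gaussian encoder: two GCN heads predict a mean and log-variance per node, a latent vector is sampled with the reparameterization trick, and a KL term regularizes the latent distribution toward N(0, I). This sketch places the distribution over latent node representations, which is one plausible reading of the abstract; the paper's actual design may differ. All function names, layer sizes, the toy graph, and the KL weight are assumptions, not the authors' implementation.

```python
import numpy as np


def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Standard GCN normalization: D^{-1/2} (A + I) D^{-1/2}."""
    a_hat = adj + np.eye(adj.shape[0])             # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))  # degrees are >= 1 after self-loops
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(a_norm, h, w, relu=True):
    """One graph convolution A_norm @ H @ W, optionally followed by ReLU."""
    out = a_norm @ h @ w
    return np.maximum(out, 0.0) if relu else out


def gaussian_kl(mu, logvar):
    """Mean over nodes of KL(N(mu, diag(exp(logvar))) || N(0, I))."""
    return float(-0.5 * np.mean(np.sum(1.0 + logvar - mu**2 - np.exp(logvar), axis=1)))


def softmax_cross_entropy(logits, labels):
    """Numerically stable mean cross-entropy for integer class labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-np.mean(log_probs[np.arange(len(labels)), labels]))


def variational_gcn_forward(adj, x, params, rng):
    """Hypothetical variational GCN: GCN encoder -> (mu, logvar) -> sampled z -> logits."""
    a_norm = normalize_adjacency(adj)
    h = gcn_layer(a_norm, x, params["w0"])                         # shared hidden layer
    mu = gcn_layer(a_norm, h, params["w_mu"], relu=False)          # latent means
    logvar = gcn_layer(a_norm, h, params["w_logvar"], relu=False)  # latent log-variances
    eps = rng.standard_normal(mu.shape)
    z = mu + np.exp(0.5 * logvar) * eps                            # reparameterization trick
    logits = z @ params["w_out"]                                   # per-node class scores
    return logits, z, gaussian_kl(mu, logvar)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy 4-node path graph with fixed binary edge weights; purely illustrative.
    adj = np.array([[0, 1, 0, 0],
                    [1, 0, 1, 0],
                    [0, 1, 0, 1],
                    [0, 0, 1, 0]], dtype=float)
    x = np.eye(4)  # one-hot node features (assumption)
    params = {
        "w0": 0.1 * rng.standard_normal((4, 8)),
        "w_mu": 0.1 * rng.standard_normal((8, 4)),
        "w_logvar": 0.1 * rng.standard_normal((8, 4)),
        "w_out": 0.1 * rng.standard_normal((4, 2)),
    }
    labels = np.array([0, 0, 1, 1])
    logits, z, kl = variational_gcn_forward(adj, x, params, rng)
    loss = softmax_cross_entropy(logits, labels) + 1e-3 * kl  # KL weight is arbitrary
    print(logits.shape, z.shape, round(loss, 4))
```

In a real model the weights would be trained by minimizing the combined loss with a gradient-based optimizer; the sampling step is what turns each node's representation into a distribution rather than a single point.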

References

Li Fangfang, Su Puzhen, Duan Junwen, Zhang Shichao, & Mao Xingliang. (2023). Multi-granularity information relationship enhancement for multi-label text classification. Journal of Software, 34(12), 5686-5703.

Tang Qinting. (2020). Research and implementation of deep learning based online news text classification system (Master's dissertation, Beijing University of Posts and Telecommunications). https://link.cnki.net/doi/10.26969/d.cnki.gbydu.2020.002057

Wang, X., Li, F., Zhang, Z., Xu, G., Zhang, J., & Sun, X. (2021). A unified position-aware convolutional neural network for aspect based sentiment analysis. Neurocomputing, 450, 91-103.

Minaee, S., Kalchbrenner, N., Cambria, E., Nikzad, N., Chenaghlu, M., & Gao, J. (2021). Deep learning-based text classification: A comprehensive review. ACM Computing Surveys (CSUR), 54(3), 1-40.

Li, Q., Peng, H., Li, J., Xia, C., Yang, R., Sun, L., ... & He, L. (2022). A survey on text classification: From traditional to deep learning. ACM Transactions on Intelligent Systems and Technology (TIST), 13(2), 1-41.

Sun Hong, Lu Xinrong, Xu Guanghui, Huang Xueyang, & Ren Libo. (2023). Fusing semantic and syntactic dependency analysis for graph convolutional news text classification. Journal of Chinese Information (07), 91-101.

Wang, J., Liang, J., Cui, J., & Liang, J. (2021). Semi-supervised learning with mixed-order graph convolutional networks. Information Sciences, 573, 171-181.

Yao, L., Mao, C., & Luo, Y. (2019, July). Graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 33, No. 01, pp. 7370-7377).

Zhang, L., Jiang, L., Li, C., & Kong, G. (2016). Two feature weighting approaches for naive Bayes text classifiers. Knowledge-Based Systems, 100, 137-144.

Haddoud, M., Mokhtari, A., Lecroq, T., & Abdeddaïm, S. (2016). Combining supervised term-weighting metrics for SVM text classification with extended term representation. Knowledge and Information Systems, 49, 909-931.

Abu Alfeilat, H. A., Hassanat, A. B., Lasassmeh, O., Tarawneh, A. S., Alhasanat, M. B., Eyal Salman, H. S., & Prasath, V. S. (2019). Effects of distance measure choice on k-nearest neighbor classifier performance: A review. Big Data, 7(4), 221-248.

Kim, H., & Jeong, Y. S. (2019). Sentiment classification using convolutional neural networks. Applied Sciences, 9(11), 2347.

Zhang, Y., Liu, Q., & Song, L. (2018). Sentence-state LSTM for text representation. arXiv preprint arXiv:1805.02474.

Liu, P., Qiu, X., & Huang, X. (2016). Recurrent neural network for text classification with multi-task learning. arXiv preprint arXiv:1605.05101.

Johnson, R., & Zhang, T. (2017, July). Deep pyramid convolutional neural networks for text categorization. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (pp. 562-570).

Teng Jinbao, Kong Weiwei, Tian Qiaoxin, Wang Zhaoqian, & Li Long. (2021). A multi-channel attention mechanism text classification model based on CNN and LSTM. Journal of Computer Engineering & Applications, 57(23).

Liu, X., You, X., Zhang, X., Wu, J., & Lv, P. (2020, April). Tensor graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 34, No. 05, pp. 8409-8416).

Ding, K., Wang, J., Li, J., Li, D., & Liu, H. (2020). Be more with less: Hypergraph attention networks for inductive text classification. arXiv preprint arXiv:2011.00387.

Pennington, J., Socher, R., & Manning, C. D. (2014, October). GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) (pp. 1532-1543).

Joulin, A., Grave, E., Bojanowski, P., & Mikolov, T. (2016). Bag of tricks for efficient text classification. arXiv preprint arXiv:1607.01759.

Zhou, P., Shi, W., Tian, J., Qi, Z., Li, B., Hao, H., & Xu, B. (2016, August). Attention-based bidirectional long short-term memory networks for relation classification. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers) (pp. 207-212).

Lyu, S., & Liu, J. (2021). Convolutional recurrent neural networks for text classification. Journal of Database Management (JDM), 32(4), 65-82.

License

Copyright (c) 2024. The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.