Exploring Bias in NLP Models: Analyzing the Impact of Training Data on Fairness and Equity

DOI: https://doi.org/10.5281/zenodo.13845132

ARK: https://n2t.net/ark:/40704/JIEAS.v2n5a04

References: 16

Keywords: Natural Language Processing (NLP), Human-computer Interaction, Bias in NLP Models, Fairness in AI, Training Data Selection, Stereotype Reinforcement, Marginalized Communities, Preprocessing Techniques, Mitigation Strategies, Responsible AI Practices

Abstract

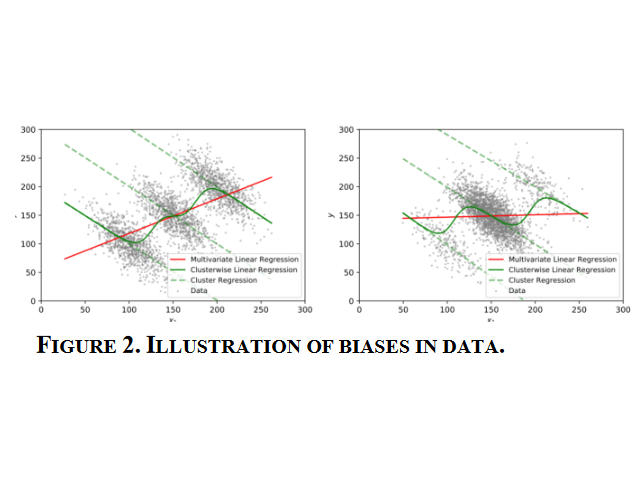

Natural Language Processing (NLP) technologies have transformed human-computer interaction, allowing machines to understand and generate human language with high accuracy. This advancement has enabled numerous applications, from virtual assistants and chatbots to sentiment analysis and automated content generation. As NLP models are incorporated into systems that affect people's lives, such as hiring algorithms, judicial decision-making tools, and social media content moderation, they raise serious concerns about bias and fairness. It is therefore important to examine the factors that contribute to bias in NLP models, in particular the influence of training data on their performance. The selection and curation of training data strongly affect a model's ability to perform equally well across diverse demographic groups: biased selection may reinforce existing stereotypes, while poor representation may lead to underperformance for marginalized communities. Preprocessing techniques such as tokenization and normalization may also inadvertently perpetuate biases if not applied with care. Through an in-depth literature review and case studies, this paper explores the sources of bias in NLP systems and proposes mitigation strategies to promote fairness and equity in these applications [1]. By identifying best practices for data curation, employing fairness-aware algorithms, and setting robust evaluation metrics, we aim to develop NLP technologies that are not only effective but also just and equitable. The findings highlight the significance of responsible AI practices and encourage developers and researchers alike to prioritize fairness as an essential aspect of NLP system design.

References

Barocas, S., & Selbst, A. D. (2016). Big data’s disparate impact. California Law Review, 104(3), 671-732.

Binns, R. (2018). Fairness in machine learning: Lessons from political philosophy. In Proceedings of the 2018 Conference on Fairness, Accountability, and Transparency (pp. 149-158).

Li, W. (2024). Transforming Logistics with Innovative Interaction Design and Digital UX Solutions. Journal of Computer Technology and Applied Mathematics, 1(3), 91-96.

Li, W. (2024). User-Centered Design for Diversity: Human-Computer Interaction (HCI) Approaches to Serve Vulnerable Communities. Journal of Computer Technology and Applied Mathematics, 1(3), 85-90.

Bolukbasi, T., et al. (2016). Man is to computer programmer as woman is to homemaker? Debiasing word embeddings. Advances in Neural Information Processing Systems, 29, 4349-4357.

Mehrabi, N., et al. (2019). A survey on bias and fairness in machine learning. ACM Computing Surveys, 54(6), 1-35.

O’Neil, C. (2016). Weapons of math destruction: How big data increases inequality and threatens democracy. Crown Publishing Group.

Zhang, B., et al. (2018). Mitigating unwanted biases with adversarial learning. In Proceedings of the 35th International Conference on Machine Learning (Vol. 80, pp. 1625-1634).

Zou, J. Y., & Schiebinger, L. (2018). AI can be sexist and racist—it’s time to make it fair. Nature, 559(7714), 324-326.

Yan, H., Xiao, J., Zhang, B., Yang, L., & Qu, P. (2024). The Application of Natural Language Processing Technology in the Era of Big Data. Journal of Industrial Engineering and Applied Science, 2(3), 20-27.

Zhang, B., Xiao, J., Yan, H., Yang, L., & Qu, P. (2024). Review of NLP Applications in the Field of Text Sentiment Analysis. Journal of Industrial Engineering and Applied Science, 2(3), 28-34.

Xiao, J., Zhang, B., Zhao, Y., Wu, J., & Qu, P. (2024). Application of Large Language Models in Personalized Advertising Recommendation Systems. Journal of Industrial Engineering and Applied Science, 2(4), 132-142.

Zhao, Y., Qu, P., Xiao, J., Wu, J., & Zhang, B. (2024). Optimizing Telehealth Services with LLM-Driven Conversational Agents: An HCI Evaluation. Journal of Industrial Engineering and Applied Science, 2(4), 122-131.

Caliskan, A., Bryson, J. J., & Narayanan, A. (2017). Semantics derived automatically from language corpora necessarily contain human biases. Science, 356(6334), 183-186.

Dev, S., et al. (2019). Attacking the API: Exploring the impacts of training data on bias in NLP models. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (pp. 617-622).

Garg, N., et al. (2018). Word embeddings quantify 100 years of gender and ethnic stereotypes. Proceedings of the National Academy of Sciences, 115(16), E3635-E3644.

License

Copyright (c) 2024 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.