Advances in Deep Reinforcement Learning for Computer Vision Applications

DOI: https://doi.org/10.70393/6a69656173.323234

ARK: https://n2t.net/ark:/40704/JIEAS.v2n6a03

Disciplines: Computer Science

Subjects: Computer Vision

References: 26

Keywords: Deep Reinforcement Learning, Computer Vision, Object Detection, Q-learning

Abstract

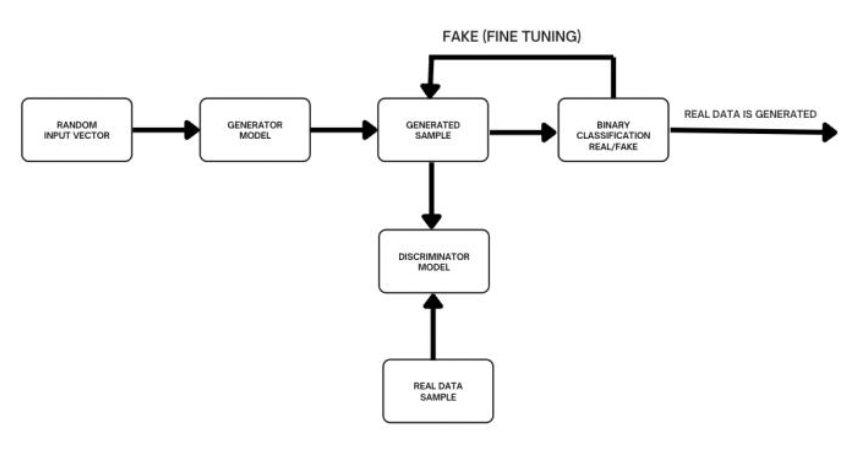

Deep Reinforcement Learning (DRL) has become widely used in computer vision (CV), particularly for visually complex environments that require sequential decision making and dynamic adaptation to changing conditions. This article introduces the basic concepts of DRL with a focus on its applications to computer vision tasks, including open problems and emerging solutions. It reviews DRL algorithms such as Q-learning, policy gradient methods, and Actor-Critic models, and explains the main modifications that make them work on high-dimensional visual problems. Applications of DRL to major CV tasks, such as object detection, image segmentation, target tracking, and image generation, are reviewed to demonstrate both the power and the limitations of DRL in practice. Newer paradigms such as hierarchical policy learning, adaptive reward design, multi-task reinforcement learning, and domain adaptation are presented as promising ways to improve model efficiency and generalizability across scenarios. Finally, the paper discusses remaining challenges, such as computational cost and sample efficiency, and outlines future directions that could broaden the use of deep reinforcement learning in computer vision. Through this overview, we aim to shed light on the synergies between DRL and CV and to identify key areas for future research and application.
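As a point of reference for the Q-learning family named above, the following is a minimal sketch of the tabular Q-learning update, which deep variants approximate with a neural network over image inputs. It is not taken from the article: the five-state chain environment, the function name `q_learning_chain`, and all hyperparameter values are assumptions chosen only for illustration.

```python
import random


def q_learning_chain(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D chain environment.

    The agent starts at state 0, can move left (action 0) or right
    (action 1), and receives reward 1.0 on reaching the last state.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    # q[state][action] holds the current action-value estimate.
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy action selection; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0

            # Bootstrapped target; the terminal state has no future value.
            if next_state == goal:
                target = reward
            else:
                target = reward + gamma * max(q[next_state])

            # Move the estimate a step of size alpha toward the target.
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = q_learning_chain()
# Greedy action in each non-terminal state (1 means "move right").
greedy_policy = [max((0, 1), key=lambda a: q_table[s][a])
                 for s in range(len(q_table) - 1)]
```

In a vision setting the table `q` would typically be replaced by a convolutional network that maps an image observation to one value per action, while the target computation keeps the same form.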

License

Copyright (c) 2024 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.