Assessment Methods and Protection Strategies for Data Leakage Risks in Large Language Models

DOI:

https://doi.org/10.70393/6a69656173.323736ARK:

https://n2t.net/ark:/40704/JIEAS.v3n2a02Disciplines:

Artificial Intelligence TechnologySubjects:

Natural Language ProcessingReferences:

23Keywords:

Large Language Models, Data Leakage Protection, Security Assessment, Privacy-Preserving Machine LearningAbstract

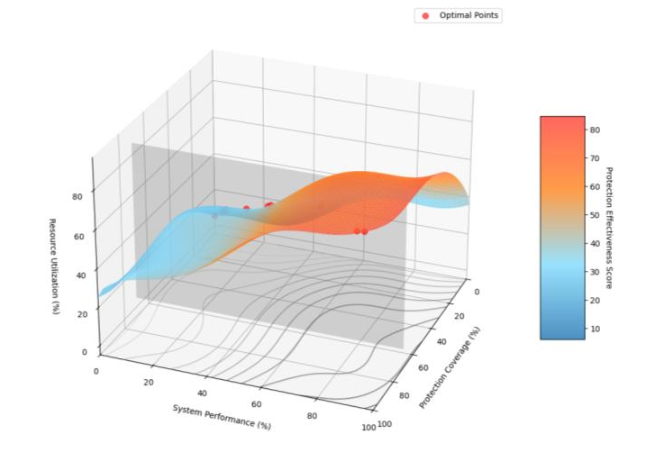

Large Language Models (LLMs) have demonstrated remarkable capabilities in natural language processing tasks, yet their inherent vulnerabilities to data leakage pose significant security and privacy risks. This paper presents a comprehensive analysis of assessment methods and protection strategies for addressing data leakage risks in LLMs. A systematic evaluation framework is proposed, incorporating multi-dimensional risk assessment models and quantitative metrics for vulnerability detection. The research examines various protection mechanisms across different stages of the LLM lifecycle, from data pre-processing to post-deployment monitoring. Through extensive analysis of protection techniques, the study reveals that integrated defense strategies combining gradient protection, query filtering, and output sanitization achieve optimal security outcomes, with risk reduction rates exceeding 95%. The implementation of these protection mechanisms demonstrates varying effectiveness across different operational scenarios, with performance impacts ranging from 8% to 18%. The research contributes to the field by establishing standardized evaluation criteria and proposing enhanced protection strategies that balance security requirements with system performance. The findings provide valuable insights for developing robust security frameworks in LLM deployments, while identifying critical areas for future research in adaptive defense mechanisms and scalable protection solutions.

Downloads

Metrics

References

[1] Das, B. C., Amini, M. H., & Wu, Y. (2024). Security and privacy challenges of large language models: A survey. ACM Computing Surveys.

[2] Balloccu, S., Schmidtová, P., Lango, M., & Dušek, O. (2024). Leak, cheat, repeat: Data contamination and evaluation malpractices in closed-source llms. arXiv preprint arXiv:2402.03927.

[3] Mathis, M., Blom, J. F., Nemecek, T., Bravin, E., Jeanneret, P., Daniel, O., & de Baan, L. (2022). Comparison of exemplary crop protection strategies in Swiss apple production: Multi-criteria assessment of pesticide use, ecotoxicological risks, environmental and economic impacts. Sustainable Production and Consumption, 31, 512-528.

[4] Kim, S., Yun, S., Lee, H., Gubri, M., Yoon, S., & Oh, S. J. (2024). Propile: Probing privacy leakage in large language models. Advances in Neural Information Processing Systems, 36.

[5] Stucki, A. O., Barton-Maclaren, T. S., Bhuller, Y., Henriquez, J. E., Henry, T. R., Hirn, C., ... & Clippinger, A. J. (2022). Use of new approach methodologies (NAMs) to meet regulatory requirements for the assessment of industrial chemicals and pesticides for effects on human health. Frontiers in Toxicology, 4, 964553.

[6] Yao, Y., Duan, J., Xu, K., Cai, Y., Sun, Z., & Zhang, Y. (2024). A survey on large language model (llm) security and privacy: The good, the bad, and the ugly. High-Confidence Computing, 100211.

[7] Zhang, X., Xu, H., Ba, Z., Wang, Z., Hong, Y., Liu, J., ... & Ren, K. (2024). Privacyasst: Safeguarding user privacy in tool-using large language model agents. IEEE Transactions on Dependable and Secure Computing.

[8] Nahar, J., Hossain, M. S., Rahman, M. M., & Hossain, M. A. (2024). Advanced Predictive Analytics For Comprehensive Risk Assessment In Financial Markets: Strategic Applications And Sector-Wide Implications. Global Mainstream Journal of Business, Economics, Development & Project Management, 3(4), 39-53.

[9] Shen, Q., Zhang, Y., & Xi, Y. (2024). Deep Learning-Based Investment Risk Assessment Model for Distributed Photovoltaic Projects. Journal of Advanced Computing Systems, 4(3), 31-46.

[10] Chen, J., Zhang, Y., & Wang, S. (2024). Deep Reinforcement Learning-Based Optimization for IC Layout Design Rule Verification. Journal of Advanced Computing Systems, 4(3), 16-30.

[11] Ju, C. (2023). A Machine Learning Approach to Supply Chain Vulnerability Early Warning System: Evidence from US Semiconductor Industry. Journal of Advanced Computing Systems, 3(11), 21-35.

[12] Çakmakçı, R., Salık, M. A., & Çakmakçı, S. (2023). Assessment and principles of environmentally sustainable food and agriculture systems. Agriculture, 13(5), 1073.

[13] Ju, C., & Ma, X. (2024). Real-time Cross-border Payment Fraud Detection Using Temporal Graph Neural Networks: A Deep Learning Approach. International Journal of Computer and Information System (IJCIS), 5(1), 103-114.

[14] Chen, H., Shen, Z., Wang, Y. and Xu, J., 2024. Threat Detection Driven by Artificial Intelligence: Enhancing Cybersecurity with Machine Learning Algorithms.

[15] Liang, X., & Chen, H. (2019, July). A SDN-Based Hierarchical Authentication Mechanism for IPv6 Address. In 2019 IEEE International Conference on Intelligence and Security Informatics (ISI) (pp. 225-225). IEEE.

[16] Pasino, A., De Angeli, S., Battista, U., Ottonello, D., & Clematis, A. (2021). A review of single and multi-hazard risk assessment approaches for critical infrastructures protection. International Journal of Safety and Security Engineering, 11(4), 305-318.

[17] Liang, X., & Chen, H. (2019, August). HDSO: A High-Performance Dynamic Service Orchestration Algorithm in Hybrid NFV Networks. In 2019 IEEE 21st International Conference on High Performance Computing and Communications; IEEE 17th International Conference on Smart City; IEEE 5th International Conference on Data Science and Systems (HPCC/SmartCity/DSS) (pp. 782-787). IEEE.

[18] Chen, H., & Bian, J. (2019, February). Streaming media live broadcast system based on MSE. In Journal of Physics: Conference Series (Vol. 1168, No. 3, p. 032071). IOP Publishing.

[19] Xu, J., Chen, H., Xiao, X., Zhao, M., Liu, B. (2025). Gesture Object Detection and Recognition Based on YOLOv11.Applied and Computational Engineering,133,81-89.

[20] Khan, S., Naushad, M., Lima, E. C., Zhang, S., Shaheen, S. M., & Rinklebe, J. (2021). Global soil pollution by toxic elements: Current status and future perspectives on the risk assessment and remediation strategies–A review. Journal of Hazardous Materials, 417, 126039.

[21] Weidinger, L., Mellor, J., Rauh, M., Griffin, C., Uesato, J., Huang, P. S., ... & Gabriel, I. (2021). Ethical and social risks of harm from language models. arXiv preprint arXiv:2112.04359.

[22] Lukas, N., Salem, A., Sim, R., Tople, S., Wutschitz, L., & Zanella-Béguelin, S. (2023, May). Analyzing leakage of personally identifiable information in language models. In 2023 IEEE Symposium on Security and Privacy (SP) (pp. 346-363). IEEE.

[23] Huang, J., Shao, H., & Chang, K. C. C. (2022). Are large pre-trained language models leaking your personal information?. arXiv preprint arXiv:2205.12628.

Downloads

Published

Versions

- 2025-07-27 (2)

- 2025-04-01 (1)

How to Cite

Issue

Section

ARK

License

Copyright (c) 2025 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.