Research on an Automated Data Insight Generation Method Based on Large Language Models

DOI: https://doi.org/10.70393/6a69656173.333436

ARK: https://n2t.net/ark:/40704/JIEAS.v3n6a02

Disciplines: Artificial Intelligence Technology

Subjects: Natural Language Processing

References: 18

Keywords: Large Language Models, Automated Data Insights, Deep Learning, Natural Language Processing, Data Mining, Machine Learning

Abstract

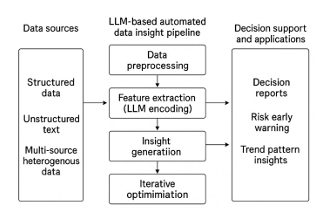

This study explores automated data insight generation methods based on large language models (LLMs) and systematically analyzes the application potential and challenges of LLMs in the field of data insights. It begins with an overview of LLMs and their development, setting out the theoretical foundations and technological evolution of LLMs in natural language processing. It then designs the research method and experimental scheme in detail and conducts empirical studies using deep learning frameworks and large-scale datasets. The experimental results show that LLM-based automated data insight generation has significant advantages in data understanding, pattern recognition, and information extraction, improving both the accuracy and the efficiency of data insights. A multi-dimensional analysis of the results identifies the strengths and limitations of the method in handling complex data structures and high-dimensional data. The study further discusses the theoretical mechanisms and technical bottlenecks behind these results and proposes concrete strategies for optimizing model performance and expanding application scenarios. Finally, the paper summarizes the research findings and outlines future research directions, with the aim of providing theoretical support and technical reference for the further development of automated data insight generation.

License

Copyright (c) 2025. The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.