Assessment Methods and Protection Strategies for Data Leakage Risks in Large Language Models

DOI: https://doi.org/10.70393/6a69656173.323736

ARK: https://n2t.net/ark:/40704/JIEAS.v3n2a02

Disciplines: Artificial Intelligence Technology

Subjects: Natural Language Processing

References: 1

Keywords: Large Language Models, Data Leakage Protection, Security Assessment, Privacy-Preserving Machine Learning

Abstract

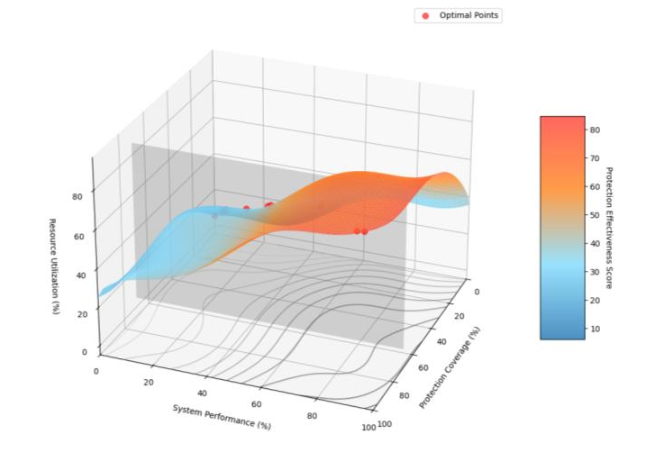

Large Language Models (LLMs) have demonstrated remarkable capabilities in natural language processing tasks, yet their inherent vulnerabilities to data leakage pose significant security and privacy risks. This paper presents a comprehensive analysis of assessment methods and protection strategies for addressing data leakage risks in LLMs. A systematic evaluation framework is proposed, incorporating multi-dimensional risk assessment models and quantitative metrics for vulnerability detection. The research examines various protection mechanisms across different stages of the LLM lifecycle, from data pre-processing to post-deployment monitoring. Through extensive analysis of protection techniques, the study reveals that integrated defense strategies combining gradient protection, query filtering, and output sanitization achieve optimal security outcomes, with risk reduction rates exceeding 95%. The implementation of these protection mechanisms demonstrates varying effectiveness across different operational scenarios, with performance impacts ranging from 8% to 18%. The research contributes to the field by establishing standardized evaluation criteria and proposing enhanced protection strategies that balance security requirements with system performance. The findings provide valuable insights for developing robust security frameworks in LLM deployments, while identifying critical areas for future research in adaptive defense mechanisms and scalable protection solutions.
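Two of the defenses named in the abstract, query filtering and output sanitization, can be illustrated with a minimal sketch. This is not the paper's implementation: the regular expressions, the `filter_query`, `sanitize_output`, and `guarded_generate` helpers, and the `model` callable are all hypothetical names chosen for illustration, and the patterns are far simpler than a production PII detector would be. Gradient protection applies at training time and is not shown.

```python
import re

# Hypothetical patterns for illustration only; a real deployment would rely
# on a vetted PII detector rather than two hand-written regexes.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Hypothetical rule: block prompts that ask the model to echo training data
# or its system prompt.
BLOCKED_QUERY = re.compile(
    r"(repeat|reveal|print).{0,40}(training data|system prompt)",
    re.IGNORECASE,
)


def filter_query(prompt: str) -> bool:
    """Return True if the prompt may be forwarded to the model."""
    return BLOCKED_QUERY.search(prompt) is None


def sanitize_output(text: str) -> str:
    """Redact substrings that look like email addresses or US SSNs."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return SSN.sub("[REDACTED_SSN]", text)


def guarded_generate(prompt: str, model) -> str:
    """Wrap a model callable (assumed: str -> str) with both defenses."""
    if not filter_query(prompt):
        return "Request declined."
    return sanitize_output(model(prompt))
```

In this sketch the filter runs before the model is called and the sanitizer runs on whatever the model returns, so a leak that slips past the first layer can still be caught by the second. That layering is the general idea behind an integrated defense, though the specific rules here are assumptions.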

Versions

- 2025-07-27 (2)

- 2025-04-01 (1)

License

Copyright (c) 2025 The author retains copyright and grants the journal the right of first publication.

This work is licensed under a Creative Commons Attribution 4.0 International License.